Subtitles for the Deaf & Hard of Hearing (SDH), often also referred to as closed or open captions (CC or OC), differ in some ways from the translation subtitles that commonly accompany foreign-language movies. Can you tell the difference, and do you know what the Accessibility Act requires of captions? Read our detailed how-to guide to find out how to make professional captions in Beey that meet all necessary standards and are easy for the audience to read.

Essential captioning practices

Captions (also sometimes called intralingual subtitles) are most commonly provided for people with hearing impairments, who use them to support comprehension or may depend on them completely for information. In recent years, however, captions have also become useful to hearing people who, for a variety of reasons, cannot or do not want to have the sound on.

It’s a good idea to determine the target audience for your captions or subtitles in advance. If you aim to make your content fully accessible to deaf people, you need to pay more attention to proofreading the transcription and setting the standardized subtitle parameters. Beey makes the whole process possible in an intuitive editor, all the way from source content upload to export.

Subtitles in Beey

Why should you try using Beey, a speech-to-text app, rather than regular subtitling software? Beey automatically transcribes your recording into an interactive transcript with timestamps and speaker separation, which makes editing easier. From that transcript you can generate automatic subtitles and edit them to fit your needs: there is a waveform preview, drag & drop features, and adjustable subtitle position, color, and font. You get anything you would expect from a professional subtitling tool, with extra automation to save time and help you work more effectively.

Is this your first time hearing about Beey? Take a look at our introductory tutorial here.

Key terms in captioning

Challenges in captioning

- People with hearing impairments are dependent on accurate transcripts or subtitles as they cannot check the information presented in the audio track of the video. Captions should therefore contain non-speech sound information as well – background noise, tone of voice, change of speakers, and more.

- For a certain group of people with hearing impairments – deaf sign language users – English (and other national languages) is not the primary language; they have to learn it as a foreign language. Reading written text can therefore be more difficult for them than for hearing viewers. Captions may need to be shortened or rephrased in order to maintain the reading speed recommended by the standard.

- In some cases, especially for longer videos where the textual information plays a larger role than the image, an interactive transcript may be more appropriate for accessibility purposes than closed captioning.

If you want to pursue subtitling at a professional level, it is advisable to familiarize yourself with the detailed captioning standards of the relevant country or your broadcasting/producing client.

Some useful links:

– Netflix subtitles style guide

– Federal Communications Commission captioning directory (for the USA)

– European Accessibility Act (for EU)

How to make high quality closed captions

Transcript and proofreading

- Try to ensure the best possible sound quality (the speaker talks directly into the microphone, speakers do not talk over each other, there is no background noise or music, etc.) and video file quality (don’t compress the file unnecessarily, don’t use overdubbed videos where the original audio track overlaps with the new one, etc.). The better the sound quality, the more accurate the transcription of the speech.

- Read through the transcribed text carefully and correct errors. Focus particularly on proper nouns, foreign words, punctuation and matching the speakers to their section of the dialogue.

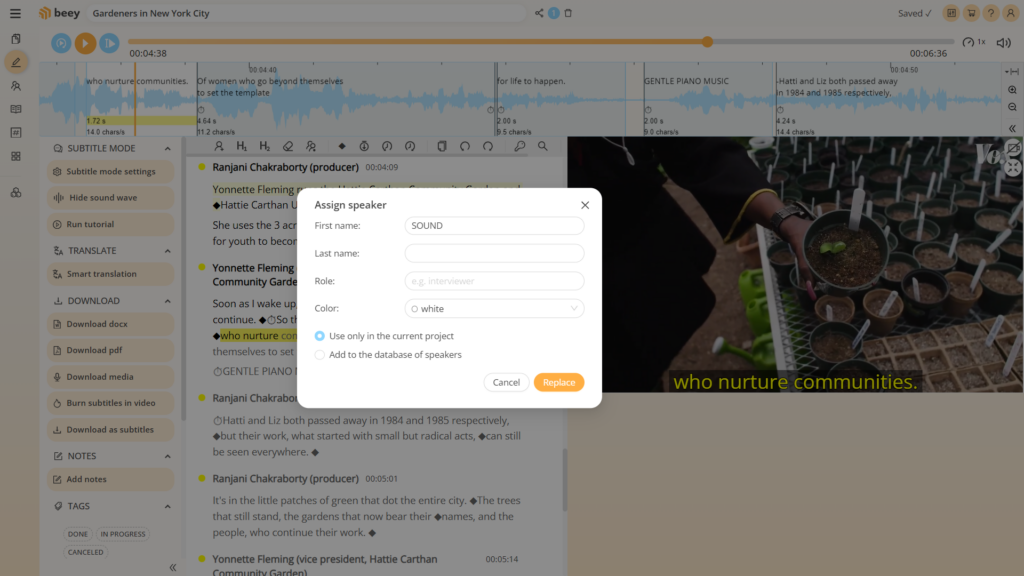

- For audiences of people with hearing difficulties, also mark non-speech sounds that are relevant to the content of the video and not obvious from the image (door slamming, dog barking, telephone ringing, song, etc.). These can be manually added to the transcript and assigned an artificial speaker called “(SOUND)” or something similar. Later on, in subtitle mode, these captions may be timed and color coded.

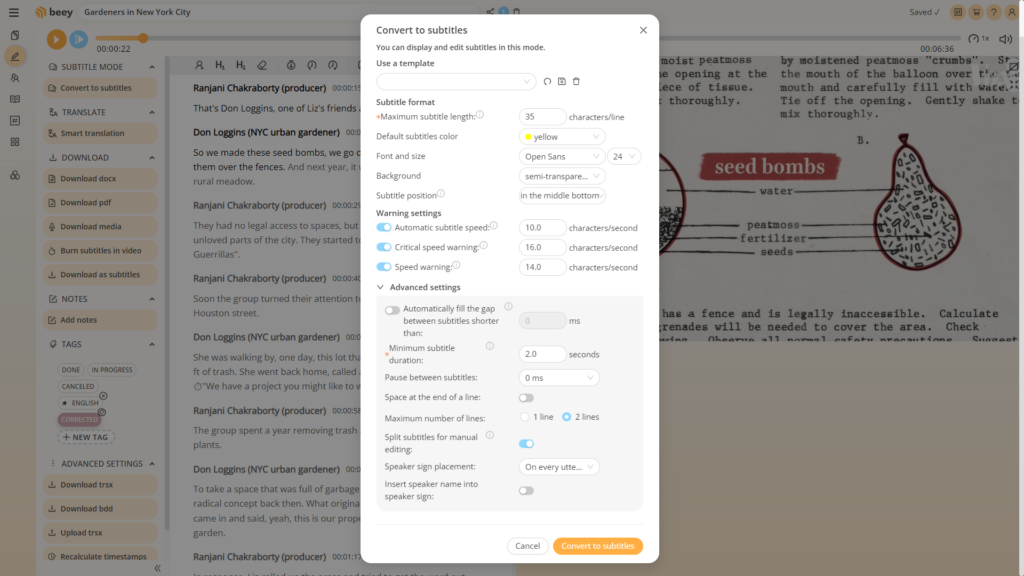

Caption settings

- Captions are automatically generated in subtitle mode. In the settings, set your required configuration. It is crucial for the deaf that the subtitles do not have too many characters per second – i.e. that the viewer has enough time to read the text. We recommend setting the automatic subtitle speed to 10-12 characters per second and the maximum speed warning to 14-16 characters per second.

- Set the minimum subtitle duration to 1.8 to 2 seconds. To help viewers recognize any changes of speakers reliably, set speaker markers at the beginning of each speech.
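The reading-speed and duration limits above are simple arithmetic, so they can be checked outside the editor as well. The following is a minimal sketch, not Beey's implementation: the `Caption` class, the function names, and the choice to count spaces as characters are all assumptions made for illustration, and the thresholds are just the upper warning value and lower duration value recommended above.

```python
from dataclasses import dataclass

# Assumed thresholds, taken from the recommendations above; adjust to your standard.
MAX_CPS_WARNING = 16.0  # upper end of the recommended warning range
MIN_DURATION_S = 1.8    # lower end of the recommended minimum duration


@dataclass
class Caption:
    """Hypothetical caption record: times in seconds plus the displayed text."""
    start: float
    end: float
    text: str


def chars_per_second(caption: Caption) -> float:
    """Reading speed: displayed characters divided by display time."""
    duration = caption.end - caption.start
    if duration <= 0:
        raise ValueError("caption must end after it starts")
    # Line breaks are not read, so they are not counted.
    # Spaces ARE counted here; some style guides count them differently.
    return len(caption.text.replace("\n", "")) / duration


def check(caption: Caption) -> list[str]:
    """Return the problems found in one caption (empty list if none)."""
    problems = []
    if caption.end - caption.start < MIN_DURATION_S:
        problems.append("too short")
    if chars_per_second(caption) > MAX_CPS_WARNING:
        problems.append("too fast")
    return problems
```

For example, a 25-character caption shown for 2 seconds runs at 12.5 characters per second and passes both checks, while 20 characters shown for 1 second are flagged as both too short and too fast.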

An example of parameter configuration:

Caption editing

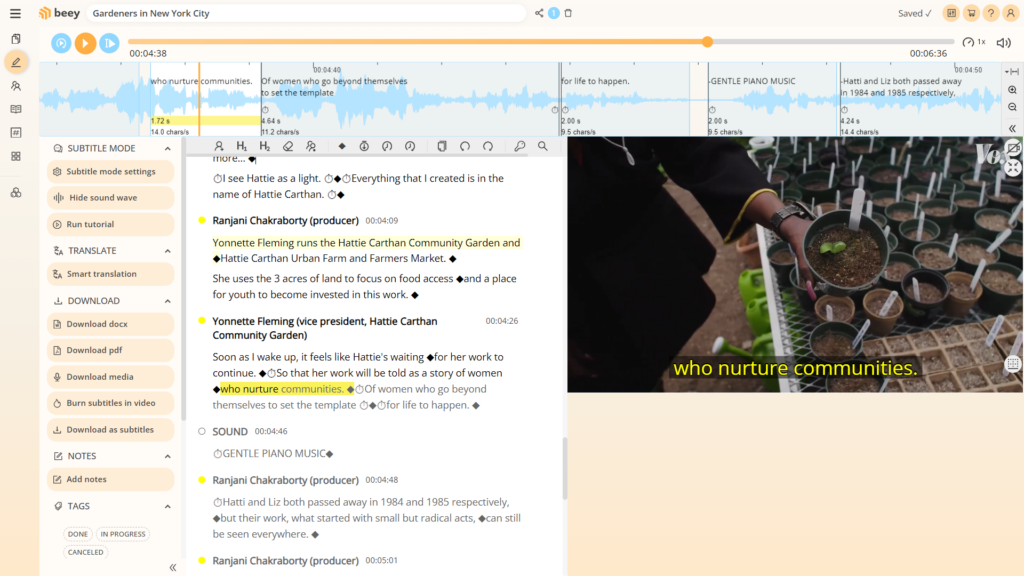

Automatically generated captions usually require some editing. In the screenshot below you can see that some of the subtitle segments are too long (highlighted in pink). We can manually split such sections with the Ctrl+B keyboard shortcut or the diamond button ◆ in the top control bar below the sound wave. Alternatively, we can also delete the separator character ◆ and split the caption elsewhere. Appropriate segmentation into logical sentence units makes reading subtitles much easier.
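To illustrate what splitting into logical sentence units can mean in practice, here is a small hypothetical heuristic. It is not how Beey segments captions; the function name `best_split` and the 0.5 punctuation discount are assumptions chosen for the sketch. It splits a caption at the word boundary nearest the middle, but prefers a boundary that directly follows punctuation so that clauses stay together.

```python
import re


def best_split(text: str) -> tuple[str, str]:
    """Split text in two at a space near the middle, preferring
    a space that directly follows a punctuation mark."""
    text = text.strip()
    middle = len(text) / 2
    # Candidate break points: the index of every space in the text.
    spaces = [m.start() for m in re.finditer(" ", text)]
    if not spaces:
        return text, ""  # a single word cannot be split

    def cost(i: int) -> float:
        distance = abs(i - middle)
        # Breaking after , ; : . ! ? keeps clauses intact, so halve the cost.
        after_punctuation = text[i - 1] in ",;:.!?"
        return distance * (0.5 if after_punctuation else 1.0)

    cut = min(spaces, key=cost)
    return text[:cut], text[cut + 1:]
```

With the input "She looked at the old clock, then she quietly left the room", the sketch breaks after the comma instead of in the middle of a clause. A real workflow would still need a human check, since punctuation alone does not capture every logical unit.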

Captions that are too fast or too short can be adjusted by dragging the section on the sound wave preview at the top of the editor. Entire captions, or just the start or end boundary of a given section, may be moved in this way to ensure all captions are timed with precision. In some very “verbose” videos, where there is not enough space to extend the caption, you might need to shorten the text so that the caption stays within the maximum reading speed while preserving the original meaning.

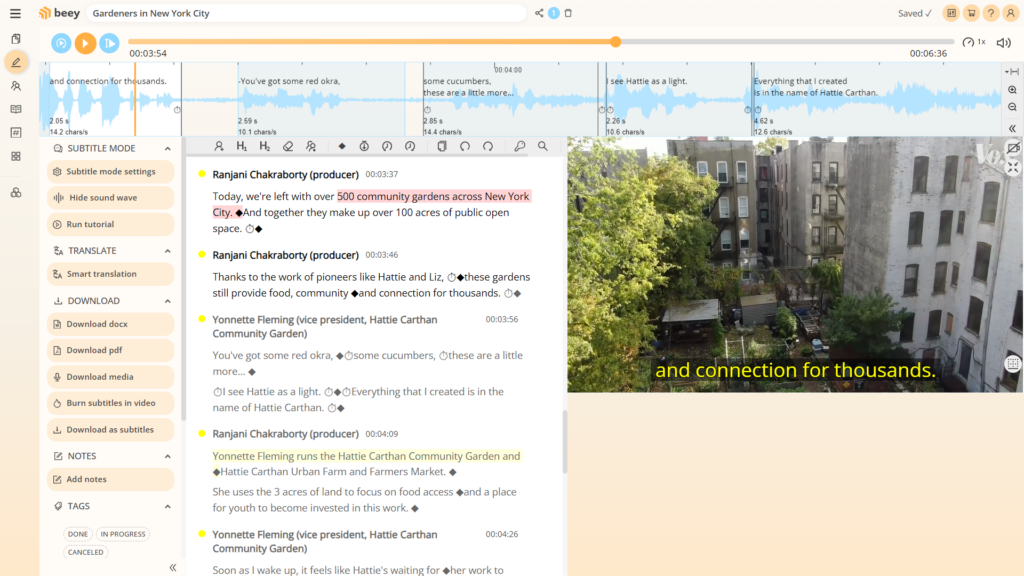

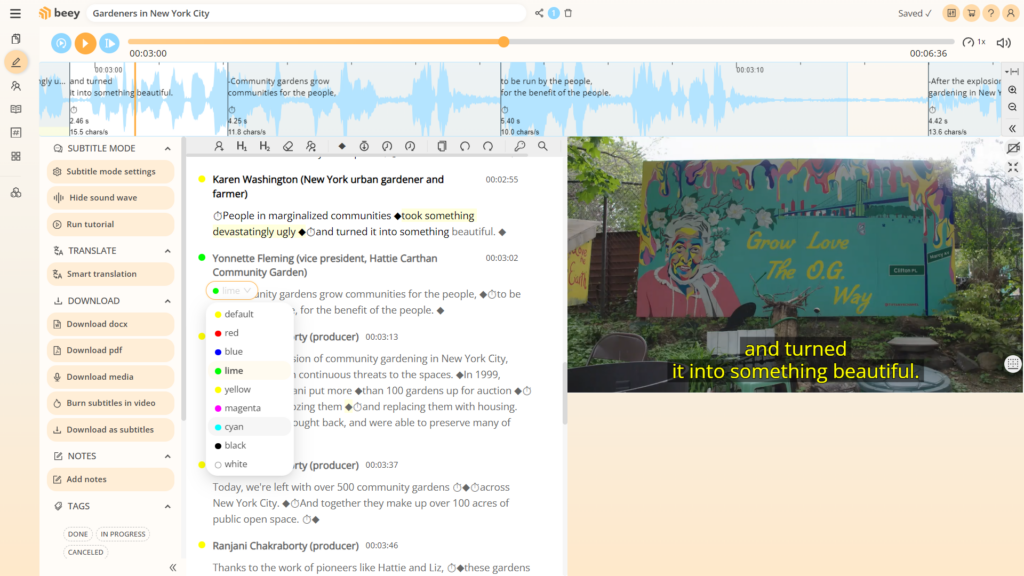

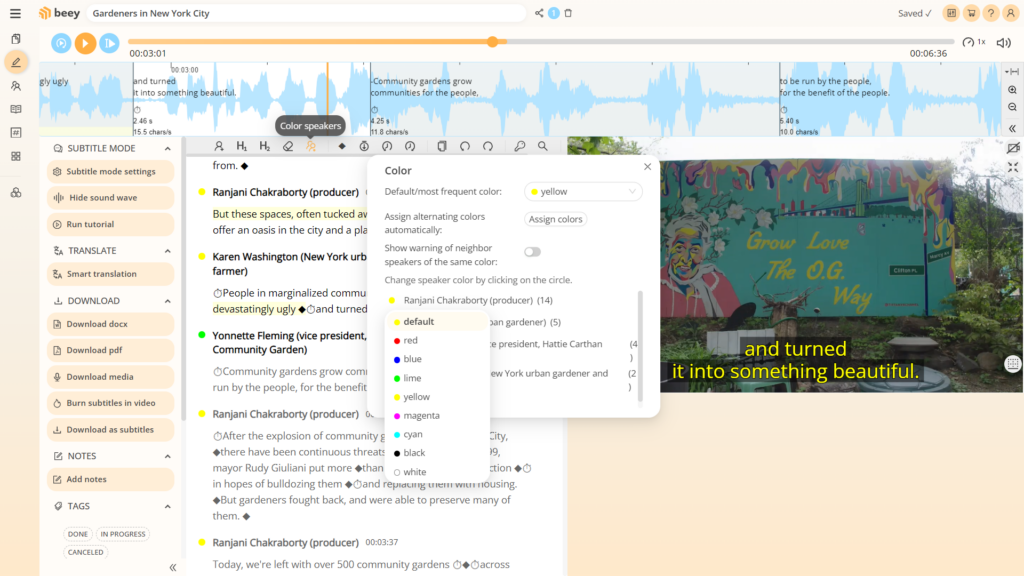
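When deciding whether to extend a caption or shorten its text, a quick calculation helps. The sketch below is an illustration under assumptions: the function names are hypothetical, the default limit of 16 characters per second is the upper warning value recommended earlier, and every character (including spaces) is counted.

```python
import math


def required_duration(text: str, max_cps: float = 16.0) -> float:
    """Shortest display time in seconds that keeps text at or below max_cps."""
    return len(text) / max_cps


def chars_to_trim(text: str, available_s: float, max_cps: float = 16.0) -> int:
    """Number of characters to remove so text fits into available_s seconds."""
    budget = math.floor(available_s * max_cps)  # characters that fit in the slot
    return max(0, len(text) - budget)
```

For instance, a 40-character caption that can only stay on screen for 2 seconds has a budget of 32 characters at this limit, so about 8 characters would have to go.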

Once the captions are ready, we can label the speakers with colors for extra clarity. The standard default color is yellow; other easy-to-read colors for identifying the main speakers are lime, cyan and white. Non-verbal sounds are indicated by capital white letters. To select a color, either click on the circle next to the speaker name in the editor, or select the Color Speakers icon in the top menu. Recommended color settings and non-speech markers may differ by country or broadcasting provider.

The finished captions can be exported in several common formats (click Download as subtitles in the left menu) or burned directly into the video.
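For readers curious about what an exported subtitle file looks like, SubRip (.srt) is one widely used format. The text above does not list which formats Beey exports, so the following is only a sketch of the SRT layout itself, not of Beey's exporter; the function names and the tuple-based input are assumptions. Each cue consists of a running number, a `start --> end` time line in `HH:MM:SS,mmm` form, the caption text, and a blank line.

```python
def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    millis = round(seconds * 1000)
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def to_srt(captions: list[tuple[float, float, str]]) -> str:
    """Render (start, end, text) tuples as the contents of an .srt file."""
    blocks = []
    for index, (start, end, text) in enumerate(captions, start=1):
        time_line = f"{srt_timestamp(start)} --> {srt_timestamp(end)}"
        blocks.append(f"{index}\n{time_line}\n{text}\n")
    # Joining with a newline leaves one blank line between cues.
    return "\n".join(blocks)


if __name__ == "__main__":
    print(to_srt([(0.0, 2.0, "(DOOR SLAMS)"), (2.5, 5.0, "Who is there?")]))
```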