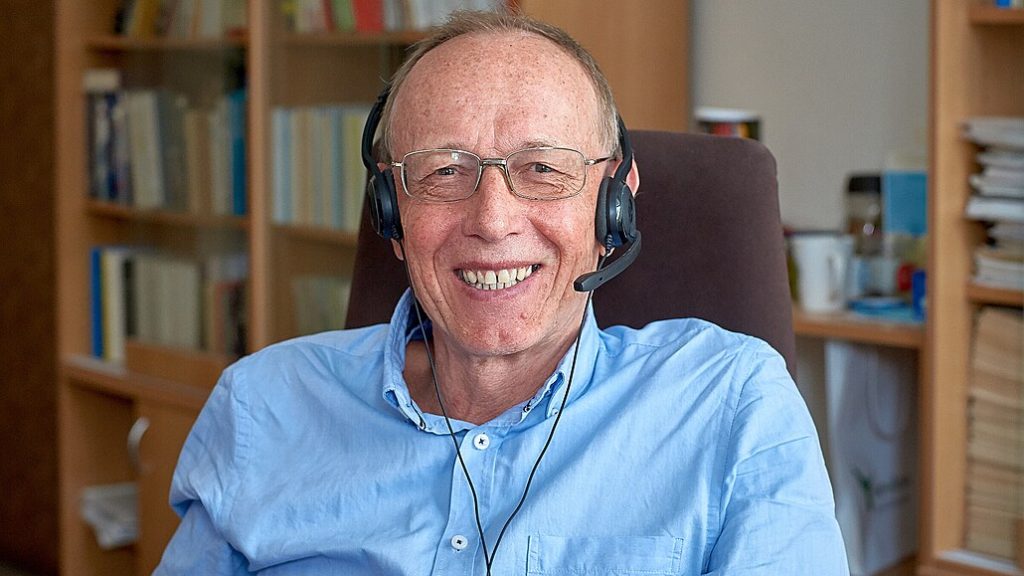

Have you ever wondered how speech-to-text conversion actually works? Beey's system is based on technology developed at the Technical University of Liberec, one of our long-time partners. Prof. Ing. Jan Nouza, CSc., the founder and original team leader of the Computer Speech Processing Laboratory (SpeechLab), gave an interview to the news service iDNES.cz in which he describes their automatic speech recognition system in more detail. Below is an excerpt in which the professor explains his part of the process.

Everyone can probably imagine what the field of computer speech processing deals with. But what is speech-to-text transcription actually used for and where?

It is used wherever spoken language predominates but the content needs to be stored or analysed as text. Examples include dictating documents, monitoring radio and TV broadcasts, and processing large spoken archives. Czech Radio, for instance, has an enormous archive going back to the beginning of broadcasting in Czechoslovakia, and dozens more hours of its stations' output are added to it every day. Our system makes it possible to search the archive quickly and immediately listen to what was said. Call centres and courts of law now keep similar archives.

Prof. Jan Nouza, professor at the Technical University of Liberec and founder of SpeechLab, the Computer Speech Processing Laboratory at the Institute of Information Technology and Electronics.

Our system is also of interest to state security services, which may need to pick out specific messages in wiretapped phone calls. Our technology also helps people with disabilities, for example those who cannot use their hands to operate a keyboard and give commands by voice instead. They can tell the mouse pointer to move, dictate emails or search the Internet.

Your technology was also useful during the pandemic. In what way?

Especially in the early days of the pandemic, Newton Technologies used our recognition system to create live captions for deaf viewers, both for important announcements on television and for press conferences. They were able to get it up and running in a matter of days, which was really important at the time.

I know your system supports several languages. How difficult is it to add a new one?

Twenty or thirty years ago, a recognition system had to be developed separately for each language. Over time, however, development reached a stage where the core of the system stays the same and only the language-specific parts have to be built for each language: the vocabulary, the set of phonemes and the language model. We started working on other languages about fifteen years ago, once we had mastered Czech. First we focused on the closest ones, i.e. the Slavic languages. Slovak took us about two years, but we kept streamlining the process, so the next language, Polish, took only one year. We then added further languages through our cooperation with Newton Technologies, which came to us with requirements of its own. At the moment we can transcribe twenty languages.
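To make the split between a shared core and per-language resources a little more concrete, here is a toy sketch. It is not SpeechLab's code, and every name in it (`LanguagePack`, `lm_score`, `rescore`) is hypothetical. It assumes the shared core has already produced a few candidate transcriptions with acoustic scores, and shows how a language-specific pack (a pronunciation lexicon mapping words to phonemes, plus a tiny bigram language model with made-up log-probabilities) could be plugged in to choose between them. Real systems use neural acoustic models and far larger language models.

```python
import math
from dataclasses import dataclass


@dataclass
class LanguagePack:
    """Hypothetical per-language resources plugged into a shared recognition core."""
    name: str
    lexicon: dict[str, list[str]]  # word -> phoneme sequence (pronunciation)
    bigram_logprob: dict[tuple[str, str], float]  # toy language model
    unseen_logprob: float = -10.0  # penalty for word pairs the model has never seen

    def lm_score(self, words: list[str]) -> float:
        # Sum log-probabilities of consecutive word pairs, starting from a sentence marker.
        score, prev = 0.0, "<s>"
        for w in words:
            score += self.bigram_logprob.get((prev, w), self.unseen_logprob)
            prev = w
        return score


def rescore(candidates: list[list[str]], acoustic_scores: list[float], pack: LanguagePack):
    """Language-independent step: add acoustic and LM scores, return the best candidate."""
    best, best_score = None, -math.inf
    for words, ac in zip(candidates, acoustic_scores):
        # In this toy sketch, candidates with words missing from the lexicon are skipped.
        # In a full system the phoneme sequences would also link words to the acoustic model.
        if any(w not in pack.lexicon for w in words):
            continue
        total = ac + pack.lm_score(words)
        if total > best_score:
            best, best_score = words, total
    return best


# Illustrative Czech pack with invented numbers.
czech = LanguagePack(
    name="cs",
    lexicon={"dobrý": ["d", "o", "b", "r", "iː"], "den": ["d", "e", "n"], "ten": ["t", "e", "n"]},
    bigram_logprob={("<s>", "dobrý"): -1.0, ("dobrý", "den"): -0.5, ("dobrý", "ten"): -3.0},
)

print(rescore([["dobrý", "den"], ["dobrý", "ten"]], [-5.0, -4.8], czech))
```

Here "dobrý ten" sounds slightly better to the acoustic model (-4.8 versus -5.0), but the language model strongly prefers the common phrase "dobrý den", so the combined score picks `['dobrý', 'den']`. Supporting another language in this sketch would only mean building another `LanguagePack`; `rescore` stays unchanged.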

Do you need native speakers for your work?

Today we use methods based on neural networks, that is, machine learning, and that's what makes modern algorithms really smart. The most advanced neural networks can even learn to recognise words that are not in their dictionary. They learn from their mistakes and gradually eliminate them, so we no longer need native speakers or linguists. What we do need is a lot of data: the more data these algorithms have, the better they learn.

The original, unabridged interview with Prof. Nouza by Gabriela Volná Garbová was published on iDNES.cz.